Project Overview: AI-Powered Shell Script Website Monitor

Goal: Create a script that monitors a list of websites, sends alerts on failure, keeps a log, and uses AI to provide intelligent analysis of downtime.

Learning Objectives:

Shell Scripting Fundamentals: Variables, loops, conditional statements (if/else), functions.

Advanced Shell Scripting: Reading from files, error handling, command-line tools (curl, date, grep), process substitution, sourcing external files.

DevOps Best Practices:

Configuration as Code: Separating configuration (websites.conf) from logic (monitor.sh).

Secrets Management: Using a .env file for API keys and tokens (never hardcode secrets!).

Automation: Scheduling the script with cron.

Logging: Maintaining a clear record of events.

Alerting: Integrating with modern tools like Slack or Telegram.

AI Integration: Using curl to interact with the OpenAI API (ChatGPT) to get human-readable insights into failures.

Prerequisites

A Linux/macOS Environment: A command line is essential.

curl: A command-line tool for transferring data with URLs (usually pre-installed).

jq: A lightweight command-line JSON processor. We’ll need this to parse the AI’s response.

Install it: sudo apt-get install jq (Debian/Ubuntu) or sudo yum install jq (CentOS/RHEL) or brew install jq (macOS).

(Optional) A Slack Webhook URL or Telegram Bot Token: For sending alerts.

(Optional) An OpenAI API Key: To enable the AI analysis feature. You can get one from the OpenAI Platform.
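Before going further, it can help to confirm that the required tools are actually on your PATH. The following is a minimal sketch; check_deps is a hypothetical helper name and is not part of the monitor script built later.

```shell
# check_deps (hypothetical helper): report any required command missing from PATH.
# Returns 0 when every command is found, 1 otherwise.
check_deps() {
  local missing=0
  local cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "Missing dependency: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Example: verify the two tools this project relies on.
if check_deps curl jq; then
  echo "All dependencies found."
fi
```

If either tool is reported missing, install it with the package manager commands listed above before continuing.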

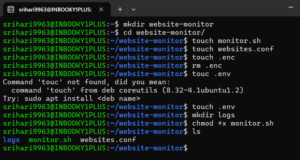

Phase 1: Project Structure

A well-structured project is a DevOps best practice. Let’s create the directory and files.

# Create the main project directory

mkdir website-monitor

cd website-monitor

# Create the core script file

touch monitor.sh

# Create the configuration file for websites

touch websites.conf

# Create a file for our secrets (environment variables)

touch .env

# Create a directory for logs

mkdir logs

# Make our script executable

chmod +x monitor.sh

Our project structure should now look like this:

website-monitor/

├── monitor.sh # The main script

├── websites.conf # List of websites to monitor

├── .env # Secrets (API keys, webhook URLs)

└── logs/ # Directory for log files

Phase 2: The Core Monitoring Logic (v1)

Let’s start by building the basic functionality inside monitor.sh.

Step 2.1: Populate Configuration Files

websites.conf: Add the URLs you want to monitor, one per line. Include one that is likely to fail for testing purposes.

https://google.com

https://github.com

https://httpstat.us/503

https://non-existent-domain-12345.com

The third URL returns a 503 error for testing, and the last one fails to resolve. Keep this file free of comments: the first two versions of the script pass every non-empty line straight to curl.

.env: Add your secret tokens here. IMPORTANT: Add .env to your .gitignore file if you use Git!

# Alerting Configuration

# Choose one: "slack", "telegram", "email", or "log"

ALERT_METHOD="email"

# Email configuration

EMAIL_TO="sriharimalapati@gmail.com"

EMAIL_FROM="srihari9963@INBOOKY1PLUS"

# OpenAI Configuration (Optional)

# OPENAI_API_KEY="sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
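To act on the .gitignore reminder above, something like the following could be run once against the project directory. This is only a sketch: ignore_env_file is a hypothetical helper name, and tightening the file permissions with chmod 600 is an extra precaution that the steps above do not require.

```shell
# ignore_env_file (hypothetical helper): list .env in .gitignore exactly once.
# $1 = project directory (defaults to the current directory)
ignore_env_file() {
  local dir=${1:-.}
  touch "${dir}/.gitignore"
  # -x matches the whole line, -F treats ".env" literally, -q suppresses output
  if ! grep -qxF '.env' "${dir}/.gitignore"; then
    echo '.env' >> "${dir}/.gitignore"
  fi
  # Optional precaution: make the secrets file readable by its owner only
  if [ -f "${dir}/.env" ]; then
    chmod 600 "${dir}/.env"
  fi
}
```

Calling ignore_env_file . from inside website-monitor is safe to repeat, because the entry is only appended when it is not already present.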

Step 2.2: The Initial monitor.sh Script

Let’s write the first version of our script. This version will read the config, check each site, and log the status.

#!/bin/bash

# Configuration

CONFIG_FILE="websites.conf"

LOG_FILE="logs/monitor.log"

ENV_FILE=".env" # NEW: Define the .env file

# Load Environment Variables

if [ -f "${ENV_FILE}" ]; then

# Source the file with auto-export enabled so quoted values (even ones containing spaces) load intact

set -a; source "${ENV_FILE}"; set +a

else

echo "Error: .env file not found at ${ENV_FILE}"

exit 1

fi

# Functions

# Function to log messages with a timestamp

log() {

echo "$(date '+%Y-%m-%d %H:%M:%S') - $1" >> "${LOG_FILE}"

}

# NEW: Function to send an email alert

send_email_alert() {

local url=$1

local reason=$2

local subject="Website Down Alert: ${url}"

local body="The website ${url} is currently down.

Reason: ${reason}

Timestamp: $(date '+%Y-%m-%d %H:%M:%S')

Please investigate the issue.

"

# Use the 'mail' command to send the email

echo "${body}" | mail -s "${subject}" "${EMAIL_TO}"

log "ALERT: Email alert sent to ${EMAIL_TO} for ${url}."

}

# NEW: Central function to handle sending alerts based on ALERT_METHOD

send_alert() {

local url=$1

local reason=$2

case "${ALERT_METHOD}" in

"email")

send_email_alert "$url" "$reason"

;;

"log")

# For 'log' method, we just log it as a critical failure

log "ALERT_TRIGGERED (method=log): ${url} is DOWN. Reason: ${reason}"

;;

*)

log "WARNING: Unknown ALERT_METHOD '${ALERT_METHOD}'. Defaulting to log."

log "ALERT_TRIGGERED (method=log): ${url} is DOWN. Reason: ${reason}"

;;

esac

}

# Function to check a single website

check_site() {

local url=$1

http_code=$(curl -s -o /dev/null -w "%{http_code}" --connect-timeout 5 --max-time 10 "${url}")

curl_exit_code=$?

if [ $curl_exit_code -ne 0 ]; then

log "ERROR: Failed to connect to ${url}. cURL exit code: ${curl_exit_code}."

# MODIFIED: Call the central alert function

send_alert "${url}" "Connection Error. cURL exit code: ${curl_exit_code}."

echo "Site ${url} is DOWN (Connection Error)."

elif [ "${http_code}" -ge 200 ] && [ "${http_code}" -lt 400 ]; then

log "SUCCESS: ${url} is UP. Status code: ${http_code}."

echo "Site ${url} is UP."

else

log "FAILURE: ${url} is DOWN. Status code: ${http_code}."

# MODIFIED: Call the central alert function

send_alert "${url}" "HTTP Status Code: ${http_code}."

echo "Site ${url} is DOWN (HTTP ${http_code})."

fi

}

# Main Script Logic

# Check if the configuration file exists

if [ ! -f "${CONFIG_FILE}" ]; then

echo "Error: Configuration file not found at ${CONFIG_FILE}"

exit 1

fi

log "Monitor script started"

# Read each line from the config file and check the site

while IFS= read -r site || [[ -n "$site" ]]; do

if [[ -n "$site" ]]; then # Ensure the line is not empty

check_site "$site"

fi

done < "${CONFIG_FILE}"

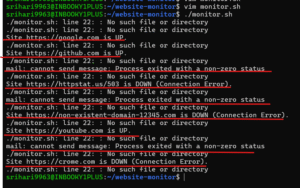

log "Monitor script finished"Run it:

./monitor.shExpected Output (on screen):

Site https://google.com is UP.

Site https://github.com is UP.

Site https://httpstat.us/503 is DOWN (HTTP 503).

Site https://non-existent-domain-12345.com is DOWN (Connection Error).

Check the log file: cat logs/monitor.log will show timestamped details.
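Because every entry follows the same "timestamp - LEVEL: message" shape, grep can pull out just the problems once the log grows. A small sketch, assuming the log format written by the log function above; show_failures is a hypothetical helper name.

```shell
# show_failures (hypothetical helper): print the most recent ERROR/FAILURE entries.
# $1 = log file (defaults to logs/monitor.log)
# $2 = maximum number of lines to show (defaults to 20)
show_failures() {
  local log_file=${1:-logs/monitor.log}
  local count=${2:-20}
  grep -E ' - (ERROR|FAILURE): ' "$log_file" | tail -n "$count"
}
```

For example, show_failures logs/monitor.log 5 would list the five latest failed checks.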

Phase 3: Adding Robust Alerting

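Now let’s make the alerts more useful. This version tracks each site’s last known status, retries failed checks, and alerts only when a site changes state (goes down or recovers).

Update .env:

If you plan to use Slack, add your SLACK_WEBHOOK_URL and set ALERT_METHOD="slack". The script in this phase handles only "email" and "log", so any other value falls back to logging; the Slack branch of send_alert appears in Phase 4.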

Update monitor.sh:

We need a place to store the last known status of each website.

In your project directory, create a new folder called status:

mkdir status

We will create a file inside this directory to track the status. You don’t need to create the file yourself; the script will do it automatically.

This is a significant upgrade to the script’s logic. We will add new functions to manage state and incorporate a retry loop. Carefully replace your existing monitor.sh with this new version.

#!/bin/bash

# Configuration

CONFIG_FILE="websites.conf"

LOG_FILE="logs/monitor.log"

ENV_FILE=".env"

STATE_FILE="status/status.log" # NEW: File to store the last known status

# Constants

MAX_RETRIES=3 # NEW: Number of times to retry a failed check

RETRY_DELAY=5 # NEW: Seconds to wait between retries

# Load Environment Variables

if [ -f "${ENV_FILE}" ]; then

# Source the file with auto-export enabled so quoted values (even ones containing spaces) load intact

set -a; source "${ENV_FILE}"; set +a

else

echo "Error: .env file not found at ${ENV_FILE}"

exit 1

fi

# Functions

# Function to log messages with a timestamp

log() {

echo "$(date '+%Y-%m-%d %H:%M:%S') - $1" >> "${LOG_FILE}"

}

# MODIFIED: Function to send an email alert (now handles different subjects)

send_email_alert() {

local url=$1

local reason=$2

local subject=$3 # NEW: Pass in the subject line

local body="Website: ${url}

Reason: ${reason}

Timestamp: $(date '+%Y-%m-%d %H:%M:%S')

"

echo "${body}" | mail -s "${subject}" "${EMAIL_TO}"

log "ALERT: Email alert with subject '${subject}' sent to ${EMAIL_TO} for ${url}."

}

# MODIFIED: Central function to handle sending alerts

send_alert() {

local url=$1

local reason=$2

local status=$3 # NEW: "DOWN" or "RECOVERY"

local subject=""

if [ "$status" == "DOWN" ]; then

subject="Website Down Alert: ${url}"

elif [ "$status" == "RECOVERY" ]; then

subject="Website Recovered: ${url}"

else

return # Do nothing if status is unknown

fi

case "${ALERT_METHOD}" in

"email")

send_email_alert "$url" "$reason" "$subject"

;;

"log")

log "ALERT_TRIGGERED (method=log): Status is ${status} for ${url}. Reason: ${reason}"

;;

*)

log "WARNING: Unknown ALERT_METHOD '${ALERT_METHOD}'. Defaulting to log."

log "ALERT_TRIGGERED (method=log): Status is ${status} for ${url}. Reason: ${reason}"

;;

esac

}

# NEW: Function to get the last known status of a site

get_last_status() {

local url=$1

if [ ! -f "${STATE_FILE}" ]; then

echo "UP" # Assume UP if state file doesn't exist yet

return

fi

# Find the line for the URL and get the status after the '='

grep "^${url}=" "${STATE_FILE}" | cut -d'=' -f2

}

# NEW: Function to update the status of a site in the state file

update_status() {

local url=$1

local status=$2 # "UP" or "DOWN"

# Create a temporary file

local temp_state_file

temp_state_file=$(mktemp)

# If the state file exists, remove the old entry for this URL

if [ -f "${STATE_FILE}" ]; then

grep -v "^${url}=" "${STATE_FILE}" > "${temp_state_file}"

fi

# Add the new status entry

echo "${url}=${status}" >> "${temp_state_file}"

# Replace the old state file with the new one

mv "${temp_state_file}" "${STATE_FILE}"

}

# HEAVILY MODIFIED: Function to check a single website

check_site() {

local url=$1

local current_status="UP" # Assume UP initially

local reason=""

# Retry Loop

for (( i=1; i<=MAX_RETRIES; i++ )); do

http_code=$(curl -s -o /dev/null -w "%{http_code}" --connect-timeout 5 --max-time 10 "${url}")

curl_exit_code=$?

if [ $curl_exit_code -ne 0 ]; then

current_status="DOWN"

reason="Connection Error. cURL exit code: ${curl_exit_code}."

elif [ "${http_code}" -ge 200 ] && [ "${http_code}" -lt 400 ]; then

current_status="UP"

reason="HTTP Status Code: ${http_code}."

break # Success, no need to retry

else

current_status="DOWN"

reason="HTTP Status Code: ${http_code}."

fi

# If we are not on the last retry, wait before trying again

if [ "$current_status" == "DOWN" ] && [ $i -lt $MAX_RETRIES ]; then

log "INFO: Check for ${url} failed (Attempt ${i}/${MAX_RETRIES}). Retrying in ${RETRY_DELAY}s..."

sleep ${RETRY_DELAY}

fi

done

# State Comparison Logic

last_status=$(get_last_status "${url}")

# If no previous status, default to UP to avoid alerts on first run

last_status=${last_status:-"UP"}

echo "Site: ${url} | Current Status: ${current_status} | Last Known Status: ${last_status}"

if [ "${current_status}" == "DOWN" ] && [ "${last_status}" == "UP" ]; then

# It just went down! Send an alert.

log "STATE_CHANGE: ${url} has gone DOWN. Reason: ${reason}"

send_alert "${url}" "${reason}" "DOWN"

update_status "${url}" "DOWN"

elif [ "${current_status}" == "UP" ] && [ "${last_status}" == "DOWN" ]; then

# It just recovered! Send a recovery alert.

log "STATE_CHANGE: ${url} has RECOVERED. Reason: ${reason}"

send_alert "${url}" "${reason}" "RECOVERY"

update_status "${url}" "UP"

elif [ "${current_status}" != "${last_status}" ]; then

# Defensive fallback: not reached while statuses are limited to UP and DOWN, because last_status defaults to UP above

update_status "${url}" "${current_status}"

else

# Status has not changed, just log it quietly

log "INFO: Status for ${url} remains ${current_status}."

fi

}

# Main Script Logic

# Ensure state and log directories exist

mkdir -p "$(dirname "${LOG_FILE}")"

mkdir -p "$(dirname "${STATE_FILE}")"

if [ ! -f "${CONFIG_FILE}" ]; then

echo "Error: Configuration file not found at ${CONFIG_FILE}"

exit 1

fi

log "Monitor script started"

while IFS= read -r site || [[ -n "$site" ]]; do

if [[ -n "$site" ]]; then

check_site "$site"

fi

done < "${CONFIG_FILE}"

log "Monitor script finished"

How to Run and What to Expect

Run the script as before: ./monitor.sh

Expected Screen Output:

Site: https://google.com | Current Status: UP | Last Known Status: UP

Site: https://github.com | Current Status: UP | Last Known Status: UP

Site: https://httpstat.us/503 | Current Status: DOWN | Last Known Status: UP

Site: https://non-existent-domain-12345.com | Current Status: DOWN | Last Known Status: UP

Key Changes:

Sourcing .env: The script now loads the variables from your .env file.

send_alert function: A modular function to handle different alert methods. The case statement makes it easy to add more (like Telegram or Slack).

Improved Logic: The script now specifically alerts on status codes >= 400.
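The alert-on-change behaviour in this version comes down to a small decision table over the last and current status. The sketch below isolates that rule so it can be tried without making any network calls; decide_action and the three values it prints are hypothetical names, not part of monitor.sh.

```shell
# decide_action (hypothetical helper): given the last known status and the
# current status, print which action the monitor would take.
# $1 = last status ("UP" or "DOWN"), $2 = current status ("UP" or "DOWN")
decide_action() {
  local last=$1
  local current=$2
  if [ "$current" = "DOWN" ] && [ "$last" = "UP" ]; then
    echo "ALERT_DOWN"        # the site just went down
  elif [ "$current" = "UP" ] && [ "$last" = "DOWN" ]; then
    echo "ALERT_RECOVERY"    # the site just came back
  else
    echo "NONE"              # no change, so no alert
  fi
}

decide_action UP DOWN     # prints ALERT_DOWN
decide_action DOWN DOWN   # prints NONE
decide_action DOWN UP     # prints ALERT_RECOVERY
```

Keeping the rule this small is what prevents a site that stays down from generating a new alert on every run.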

Phase 4: The AI Integration

This is the “expert” level. When a site is down, we’ll ask an AI what the error code means and what to do about it.

Update .env:

Uncomment and add your OPENAI_API_KEY.

Final monitor.sh with AI:

#!/bin/bash

# Load environment variables from .env file

if [ -f .env ]; then

# Source the file with auto-export enabled so quoted values (even ones containing spaces) load intact

set -a; source ./.env; set +a

fi

# Configuration

CONFIG_FILE="websites.conf"

LOG_FILE="logs/monitor.log"

# Set to "true" to enable AI analysis on failures

AI_ANALYSIS_ENABLED="true"

# Functions

log() {

# Log to both console and file

echo "$(date '+%Y-%m-%d %H:%M:%S') - $1" | tee -a "${LOG_FILE}"

}

get_ai_suggestion() {

local url=$1

local error_code=$2

local error_type=$3 # "HTTP" or "Connection"

if [ "${AI_ANALYSIS_ENABLED}" != "true" ] || [ -z "${OPENAI_API_KEY}" ]; then

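log "AI analysis disabled or API key not set." >&2

return

fi

# log prints to stdout via tee; redirect to stderr so the caller's $(...) captures only the suggestion

log "Querying AI for analysis on ${url} (${error_type} ${error_code})..." >&2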

if [ "${error_type}" == "HTTP" ]; then

prompt="A website monitor check for the URL '${url}' failed with HTTP status code ${error_code}. As a DevOps expert, briefly explain what this status code likely means in one sentence and suggest 2-3 immediate, practical troubleshooting steps in a bulleted list."

else

prompt="A website monitor check for the URL '${url}' failed with a cURL connection error (exit code ${error_code}). As a DevOps expert, briefly explain what this type of error likely means (e.g., DNS, firewall) in one sentence and suggest 2-3 immediate, practical troubleshooting steps in a bulleted list."

fi

# Construct the JSON payload for the OpenAI API

json_payload=$(cat <<EOF

{

"model": "gpt-3.5-turbo",

"messages": [{"role": "user", "content": "${prompt}"}],

"temperature": 0.5,

"max_tokens": 150

}

EOF

)

# Use curl to call the API and jq to parse the response

response=$(curl -s -X POST https://api.openai.com/v1/chat/completions \

-H "Content-Type: application/json" \

-H "Authorization: Bearer ${OPENAI_API_KEY}" \

-d "${json_payload}")

# Extract the message text; jq -r prints the raw string with real newlines, and "// empty" yields nothing if the field is missing

suggestion=$(echo "${response}" | jq -r '.choices[0].message.content // empty')

if [ -n "${suggestion}" ] && [ "${suggestion}" != "null" ]; then

printf '\n\n*AI DevOps Assistant Suggestion:*\n%s\n' "${suggestion}"

else

echo "Could not retrieve AI suggestion. API response: ${response}"

fi

}

send_alert() {

local subject=$1

local body=$2

log "Sending alert: ${subject}"

case "${ALERT_METHOD}" in

"slack")

if [ -z "${SLACK_WEBHOOK_URL}" ]; then log "ERROR: SLACK_WEBHOOK_URL is not set."; return; fi

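# Build the payload with jq so quotes and newlines in the subject or body are escaped into valid JSON

payload=$(jq -n --arg subject "*${subject}*" --arg body "${body}" '{blocks: [{type: "section", text: {type: "mrkdwn", text: $subject}}, {type: "section", text: {type: "mrkdwn", text: $body}}]}')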

curl -s -X POST -H 'Content-type: application/json' --data "${payload}" "${SLACK_WEBHOOK_URL}" > /dev/null

;;

*)

log "ALERT (Logged): Subject: ${subject} - Body: ${body}"

;;

esac

}

check_site() {

local url=$1

http_code=$(curl -s -o /dev/null -w "%{http_code}" --connect-timeout 5 --max-time 10 "${url}")

curl_exit_code=$?

if [ $curl_exit_code -ne 0 ]; then

subject="🚨 Website Down: ${url}"

body="Failed to connect to the site (cURL exit code: ${curl_exit_code}). This could be a DNS or network issue."

ai_suggestion=$(get_ai_suggestion "${url}" "${curl_exit_code}" "Connection")

send_alert "${subject}" "${body}${ai_suggestion}"

elif [ "${http_code}" -ge 400 ]; then

subject="🚨 Website Alert: ${url}"

body="The site is responding with a non-success HTTP status code: *${http_code}*."

ai_suggestion=$(get_ai_suggestion "${url}" "${http_code}" "HTTP")

send_alert "${subject}" "${body}${ai_suggestion}"

else

log "SUCCESS: ${url} is UP. Status code: ${http_code}."

fi

}

# Main Script Logic

if [ ! -f "${CONFIG_FILE}" ]; then log "Error: Configuration file not found at ${CONFIG_FILE}"; exit 1; fi

log "--- Monitor script started ---"

while IFS= read -r site || [[ -n "$site" ]]; do

if [[ -n "$site" ]] && [[ ! "$site" =~ ^# ]]; then

check_site "$site"

fi

done < "${CONFIG_FILE}"

log "--- Monitor script finished ---"

Key AI-related Changes:

get_ai_suggestion function: This is the core of the AI feature.

It takes the URL and error code as input.

It crafts a specific, role-based prompt for the AI (“As a DevOps expert…”). This is called prompt engineering.

It uses a heredoc (cat <<EOF…) to create the JSON payload for the OpenAI API, which is a clean way to handle multi-line strings.

It calls the API using curl.

It uses jq to parse the JSON response and extract the AI’s message.

Integration: The check_site function now calls get_ai_suggestion on failure and appends the AI’s response to the alert body before sending it.
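One caveat with the heredoc approach is that the prompt is pasted into the JSON as-is, so a double quote or newline inside it would produce an invalid payload. Since jq is already a prerequisite, an alternative is to let jq do the escaping. The following is a sketch of that idea rather than the script's own method; build_payload is a hypothetical helper name, and the model and parameter values are copied from the script above.

```shell
# build_payload (hypothetical helper): build the chat-completions request body
# with jq, which escapes quotes, backslashes, and newlines in the prompt.
# $1 = prompt text
build_payload() {
  local prompt=$1
  jq -n --arg content "$prompt" '{
    model: "gpt-3.5-turbo",
    messages: [{role: "user", content: $content}],
    temperature: 0.5,
    max_tokens: 150
  }'
}

# A prompt containing double quotes still yields valid JSON;
# reading it back with jq returns the original text unchanged.
build_payload 'Explain the "503" status code' | jq -r '.messages[0].content'
```

The result could be passed to curl with -d "$(build_payload "${prompt}")" in place of the heredoc variable.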

Phase 5: Automation with Cron

The final step is to automate the script to run periodically.

Open your crontab for editing: crontab -e

Add a line to run the script. This example runs it every 5 minutes.

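IMPORTANT: Use absolute paths in your cron job to avoid issues. Because monitor.sh refers to websites.conf, .env, and logs/ by relative path, and cron starts jobs in your home directory, change into the project directory first.

# Run the website monitor every 5 minutes

*/5 * * * * cd /path/to/your/project/website-monitor && ./monitor.sh

Find the absolute path by navigating to your website-monitor directory and running pwd.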

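The cron job will run in the background. Each log line is appended to logs/monitor.log; because log also prints through tee, the same lines go to the job’s standard output, which cron typically mails to the local user if mail delivery is set up.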
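Relative paths such as websites.conf resolve against the directory the job starts in, not against the script's location. One common way to make a script independent of the caller's working directory is to have it change into its own directory first. This is a sketch of lines that could go near the top of monitor.sh; SCRIPT_DIR is a hypothetical variable name.

```shell
# Resolve the directory this script lives in, regardless of where it is called from.
# SCRIPT_DIR is a hypothetical name; BASH_SOURCE[0] is the path bash used to load this file.
SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

# Work from that directory so websites.conf, .env, and logs/ are found.
cd "${SCRIPT_DIR}" || exit 1

echo "Running from ${SCRIPT_DIR}"
```

With this in place, the crontab entry can call the script by its absolute path alone.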

Final Project Review

You now have a complete, end-to-end monitoring solution that demonstrates expert-level shell scripting and modern DevOps principles.

Modular: Functions for logging, alerting, and checking make the code clean and reusable.

Configurable: URLs and secrets are managed outside the script.

Robust: It handles different types of failures (connection vs. HTTP errors).

Automated: cron ensures it runs continuously without manual intervention.

Intelligent: AI integration provides valuable, context-aware troubleshooting advice directly in the alert, saving engineers time during an outage.
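The connection-versus-HTTP distinction can be made more informative by translating the most common curl exit codes into plain language before they reach the log or the alert. A minimal sketch: describe_curl_exit is a hypothetical helper name, the meanings follow curl's documented exit codes, and the list is deliberately partial.

```shell
# describe_curl_exit (hypothetical helper): map a curl exit code to a short reason.
# $1 = curl exit code
describe_curl_exit() {
  case "$1" in
    0)  echo "OK" ;;
    6)  echo "Could not resolve host (DNS)" ;;
    7)  echo "Failed to connect to host" ;;
    28) echo "Operation timed out" ;;
    35) echo "SSL/TLS handshake failed" ;;
    60) echo "SSL certificate could not be verified" ;;
    *)  echo "Unrecognized curl exit code: $1" ;;
  esac
}

describe_curl_exit 6    # prints: Could not resolve host (DNS)
```

The returned text could be appended to the reason string that check_site passes to send_alert.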

This project is an excellent foundation. You can expand it by adding more alert methods, checking for specific content on a page, or measuring website response times.
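As one illustration of the last idea, curl can report how long a request took through its -w '%{time_total}' write-out variable. The sketch below is only a starting point: measure_response_time, is_slow, and the 2.0 second threshold are all hypothetical choices.

```shell
# measure_response_time (hypothetical helper): print the total request time in seconds.
# $1 = URL to check
measure_response_time() {
  curl -s -o /dev/null -w '%{time_total}' --connect-timeout 5 --max-time 10 "$1"
}

# is_slow (hypothetical helper): succeed (exit 0) when $1 seconds exceeds threshold $2.
# awk does the comparison because bash's [ ] only compares integers.
is_slow() {
  awk -v t="$1" -v limit="$2" 'BEGIN { exit !(t > limit) }'
}

# Example with a fixed value, so no network access is needed to try it:
if is_slow 2.75 2.0; then
  echo "Response time exceeds threshold"
fi
```

In the monitor, the value from measure_response_time "${url}" would be passed to is_slow, and a slow response could be logged or alerted on like any other failure.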
